Vox-Pol Conf Twitter Community

2018/08/22

This short notebook scrapes tweets related to the Vox-Pol Conference 2018 in Amsterdam. As this was again a very inspiring Vox-Pol event, I thought it was time to explore its Twitter community further.

Packages

Load the necessary packages.

# install pacman once if it is not available on your machine
# install.packages("pacman")
pacman::p_load(tidyverse, purrr, tidyr, rtweet, stringr, ggraph, igraph, tidygraph, forcats)

Get Data

Call the Twitter API. If you want to scrape the data yourself, you have to register a free account, which gives you personal access credentials for the Twitter API. Check out rtweet on GitHub and follow its instructions for Twitter authentication.

twitter_token <- readRDS("twitter_token.rds")

rt <- search_tweets(
  "#VOXPolConf18 OR #VOXPolConf2018",
  n = 2000, include_rts = TRUE, retryonratelimit = TRUE,
  ## pass the stored token explicitly so it is actually used
  token = twitter_token
)

save(rt, file = "rt.Rdata")

Let's first look at the data structure and column names. Twitter returns a huge amount of data.

rt %>% glimpse() # similar to str(): prints a compact overview of the data frame

The top ten retweeted tweets.

# load("rt.Rdata")

rt %>%
  select(screen_name, text, retweet_count) %>%
  ## drop retweets
  filter(!str_detect(text, "^RT")) %>%
  ## replace line breaks with spaces
  mutate(text = str_replace_all(text, "\n", " ")) %>%
  arrange(desc(retweet_count)) %>%
  ## top_n() keeps ties, so more than ten rows can be returned
  top_n(n = 10, wt = retweet_count) %>%
  knitr::kable(format = "markdown")

| screen_name | text | retweet_count |
|---|---|---|
| MubarazAhmed | ISIS jihadis remain keen on returning to mainstream social media platforms for recruitment purposes, don’t just want to be talking to each other on Telegram, says @AmarAmarasingam. #voxpolconf18 https://t.co/JwQLqakmF6 | 28 |
| intelwire | It’s happening!!! #voxpolconf18 https://t.co/XV7nWCG6vN | 21 |
| MubarazAhmed | Fascinating findings presented by @AmarAmarasingam on languages used in Telegram communications by jihadi groups. Arabic remains integral to ISIS on Telegram, but there is also a surprisingly high level of activity in Persian. Cc: @KasraAarabi #voxpolconf18 https://t.co/7YVFUr2WBD | 19 |
| FabioFavusMaxim | Very excited to have presented our research on the Alt-Right with @systatz at #voxpolconf2018. Received some great suggestions by @miriam_fs. to improve our analysis, which is definitely something we’ll implement. You can check out our slides here: https://t.co/uGV7es8VhF https://t.co/pz3113fPZN | 19 |
| VOX_Pol | We look forward to seeing many of you in Amsterdam next week for #voxpolconf18. Most up-to-date version of the Conference Programme is at https://t.co/SLzRsN2y6E and also below. https://t.co/aw5IdEWcRD | 17 |
| ErinSaltman | Present & future trends within violent extremism & terrorism; new tech, new tactics, old problems, old groups. Pleasure & privilege to share panel discussion with @intelwire @techvsterrorism @p_vanostaeyen moderated by @VOX_Pol @galwaygrrl. Big Qs at #voxpolconf18 !! https://t.co/fBnlrEe4c2 | 17 |
| lizzypearson | Really looking forward to @VOX_Pol Amsterdam conference where I’m talking UK Islamist offline reflections on online. Plus! seeing presentations by @Swansea_Law colleagues @CTProject_JW on online Jihadism in the US and @CTP_ALW on Britain First imagery in the UK #voxpolconf18 https://t.co/vGDsJgZM4I | 15 |
| AmarAmarasingam | Day 2: @pieternanninga talks about the dramatic drop in ISIS video releases from 2015 to 2018. #VOXpolconf18 https://t.co/ipJYXzXIUI | 14 |
| MoignKhawaja | .@AmarAmarasingam giving a very interesting presentation on how jihadists are using @telegram as a platform for various purposes including propaganda dissemination here at .@VOX_Pol #VOXPolConf2018 day 1 session 2 chaired by @galwaygrrl https://t.co/NOLTPDUn1G | 13 |
| Drjohnhorgan | Follow #VoxPolConf18 this week to learn about new research on terrorism, extremism and everything in between | 13 |
| MiloComerford | Important corrective on online extremism from @MubarazAhmed’s research at #VOXPolConf18 - large proportion of traffic to extremist websites comes from searches, not social media. @VOX_Pol https://t.co/JVGIXouaa4 | 13 |

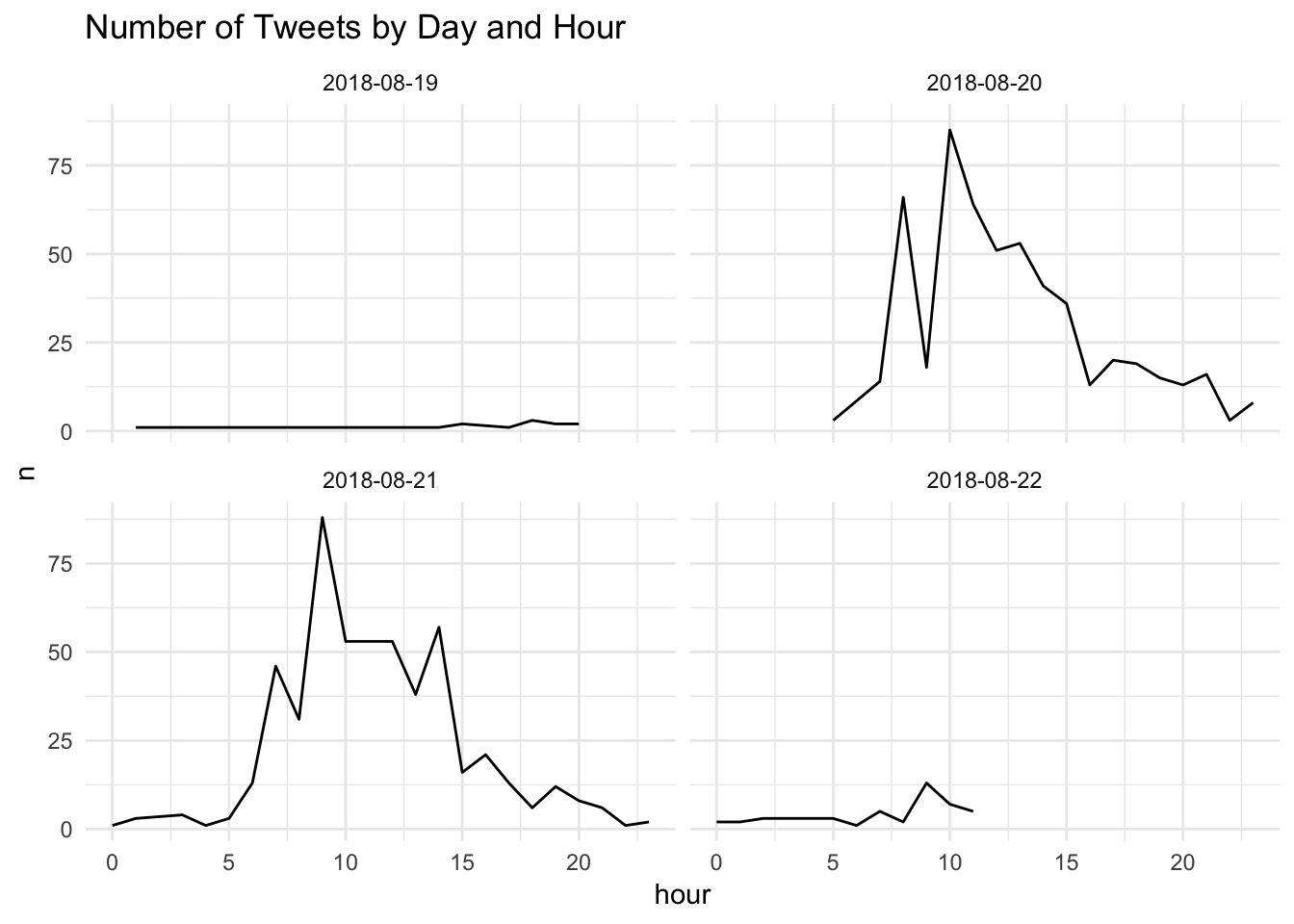
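The table above has eleven rows although ten were requested. A likely reason is that `top_n()` keeps ties, and several tweets share the lowest qualifying retweet count of 13. The sketch below reproduces the effect on a small made-up data frame (the `toy` tibble and its values are illustrative assumptions, not scraped data). It also shows `slice_max()` with `with_ties = FALSE`, which needs dplyr 1.0.0 or later, as one possible way to get exactly `n` rows.

```r
library(dplyr)

# Made-up example data: two accounts are tied on 3 retweets
toy <- tibble(
  screen_name   = c("a", "b", "c", "d"),
  retweet_count = c(5, 3, 3, 1)
)

# top_n() keeps every row tied with the n-th value, so 3 rows come back
top2 <- toy %>%
  arrange(desc(retweet_count)) %>%
  top_n(n = 2, wt = retweet_count)

# slice_max() with with_ties = FALSE returns exactly 2 rows
top2_strict <- toy %>%
  slice_max(retweet_count, n = 2, with_ties = FALSE)

nrow(top2)
nrow(top2_strict)
```

Whether ties should be kept is a judgment call; for a "top ten" table, keeping them is arguably the fairer choice.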

Timeline

What was the best time to tweet?

rt %>%
  ## parse date format
  mutate(
    cdate = created_at %>%
      str_extract("\\d{4}-\\d{2}-\\d{2}") %>%
      lubridate::ymd(),
    hour = lubridate::hour(created_at)
  ) %>%
  ## select relevant time period
  filter(cdate >= as.Date("2018-08-19")) %>%
  ## count tweets per day and hour
  group_by(cdate, hour) %>%
  tally() %>%
  ungroup() %>%
  ggplot(aes(hour, n)) +
  geom_line() +
  ## split the visualization
  facet_wrap(~cdate, ncol = 2) +
  theme_minimal() +
  ggtitle("Number of Tweets by Day and Hour")

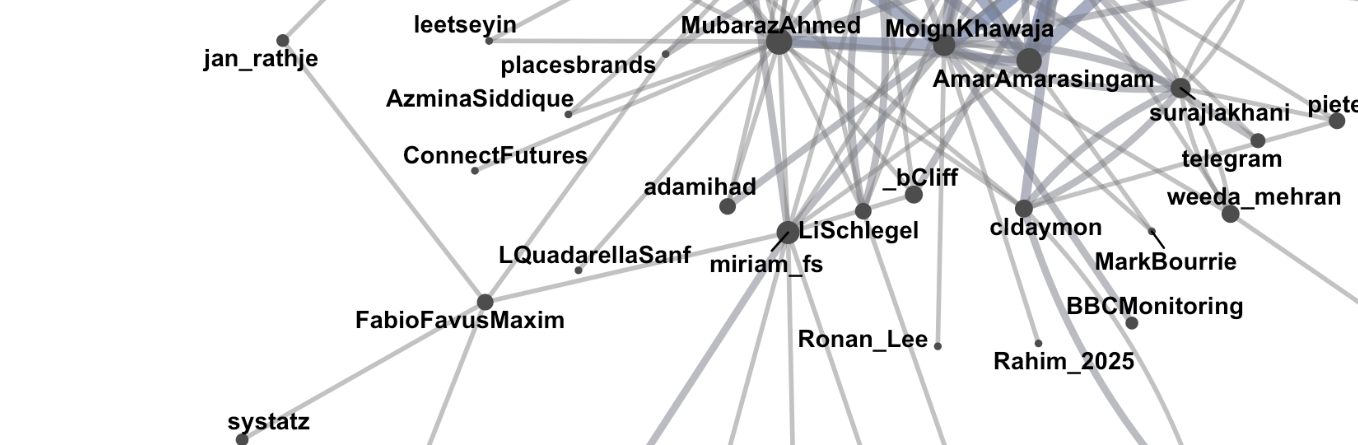

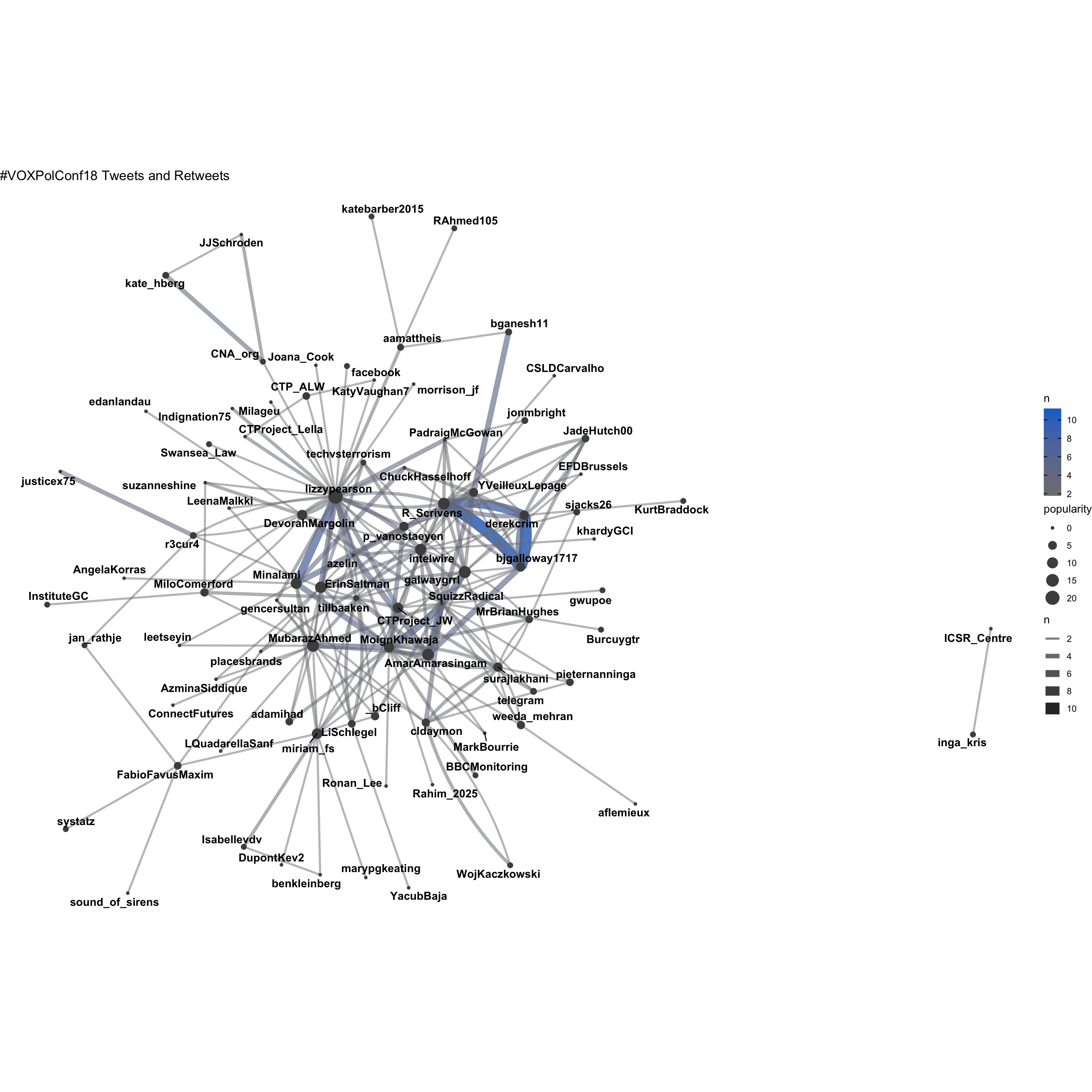
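To make the day-and-hour counting step easier to follow, here is a minimal sketch on four made-up timestamps (the `toy` tibble is an assumption for illustration only). It uses base R date helpers instead of lubridate, so it is a variation on the pipeline above rather than the same code. Note that the hour is taken in UTC here; if `created_at` is stored in UTC, as is usual for rtweet, the hours in the plot are two hours behind Amsterdam local time in August.

```r
library(dplyr)

# Made-up timestamps in UTC, not taken from the scraped tweets
toy <- tibble(
  created_at = as.POSIXct(
    c("2018-08-20 09:15:00", "2018-08-20 09:45:00",
      "2018-08-20 14:05:00", "2018-08-21 09:30:00"),
    tz = "UTC"
  )
)

hourly <- toy %>%
  mutate(
    # calendar day and hour of day, both evaluated in UTC
    cdate = as.Date(created_at, tz = "UTC"),
    hour  = as.integer(format(created_at, "%H", tz = "UTC"))
  ) %>%
  # one row per day/hour combination with the number of tweets
  count(cdate, hour)

hourly
```

Day/hour combinations without any tweets do not appear in the result, which is why `geom_line()` connects the observed hours directly.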

Retweet Network

rt_graph <- rt %>%
  ## select relevant variables
  dplyr::select(screen_name, mentions_screen_name) %>%
  ## unnest list of mentions_screen_name
  unnest(mentions_screen_name) %>%
  ## exclude the conference account itself
  filter(!(screen_name == "VOX_Pol" | mentions_screen_name == "VOX_Pol")) %>%
  ## count the number of co-occurrences
  group_by(screen_name, mentions_screen_name) %>%
  tally(sort = TRUE) %>%
  ungroup() %>%
  ## drop missing values
  drop_na() %>%
  ## keep only co-occurrences that appear at least 2 times
  filter(n > 1) %>%
  ## transform the data frame into a graph object
  as_tbl_graph() %>%
  ## calculate node centrality
  mutate(popularity = centrality_degree(mode = "in"))

rt_graph %>%
  ## create graph layout
  ggraph(layout = "kk") +
  ## define edge aesthetics
  geom_edge_fan(aes(alpha = n, edge_width = n, color = n)) +
  ## scale down link saturation
  scale_edge_alpha(range = c(.5, .9)) +
  ## define edge color gradient
  scale_edge_color_gradient(low = "gray50", high = "#1874CD") +
  ## scale node size by popularity
  geom_node_point(aes(size = popularity), color = "gray30") +
  ## define node labels
  geom_node_text(aes(label = name), repel = TRUE, fontface = "bold") +
  ## equal width and height
  coord_fixed() +
  ## plain theme
  theme_void() +
  ## title
  ggtitle("#VOXPolConf18 Tweets and Retweets")

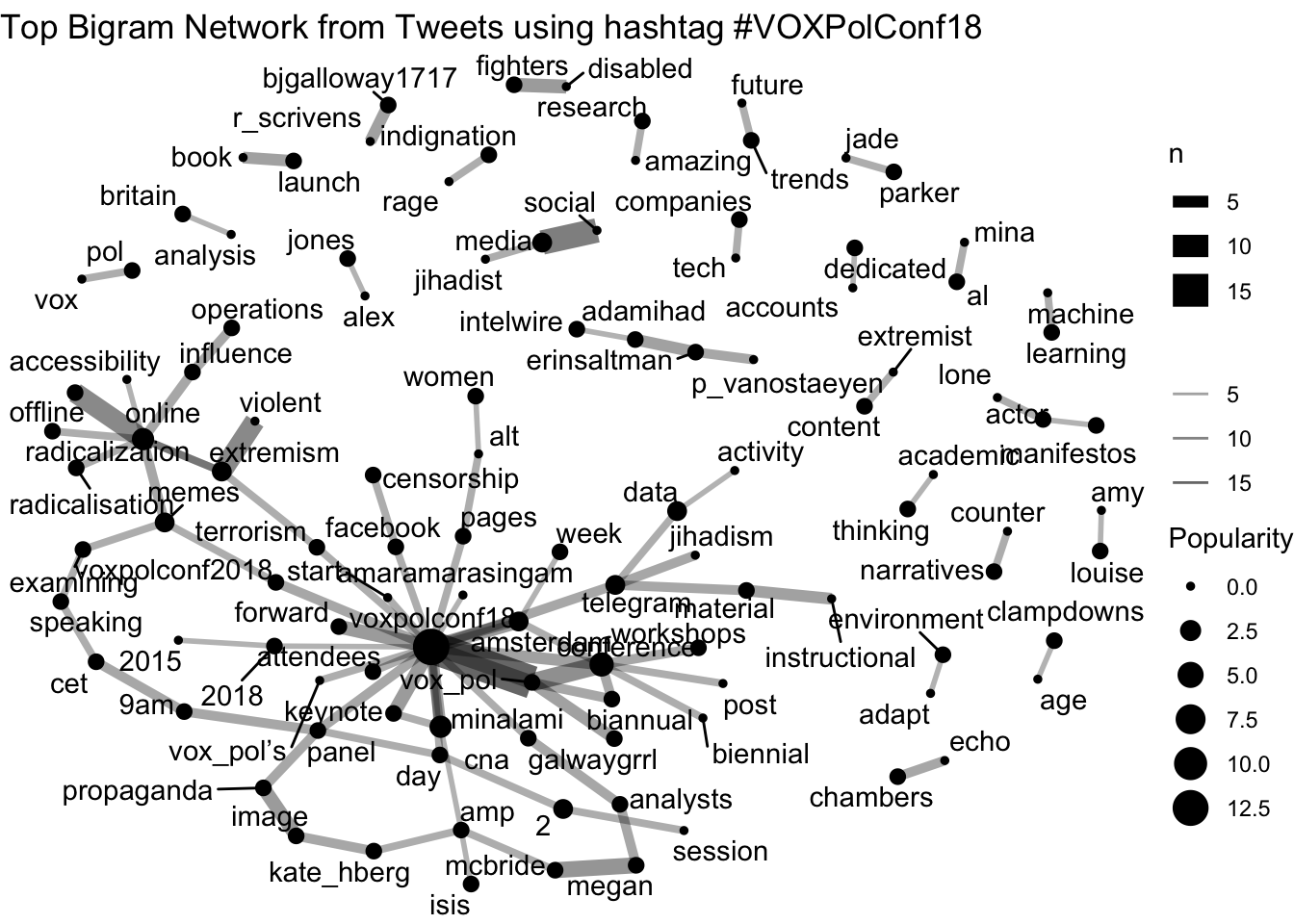
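The edge list behind the network can be checked by hand on a toy example. The sketch below assumes a small made-up tibble with a list column of mentions (the names `alice`, `bob` and `carol` are hypothetical) and counts incoming edges with plain dplyr. For an unweighted graph this should match what `centrality_degree(mode = "in")` reports, but it is an illustration of the idea, not tidygraph's implementation.

```r
library(dplyr)
library(tidyr)

# Made-up tweets: each row is one tweet with the accounts it mentions
toy <- tibble(
  screen_name = c("alice", "alice", "bob", "carol"),
  mentions_screen_name = list(c("bob", "carol"), "bob", "carol", NA_character_)
)

# One row per (author, mentioned account) pair, with the number of tweets
edges <- toy %>%
  unnest(mentions_screen_name) %>%
  drop_na() %>%
  count(screen_name, mentions_screen_name, sort = TRUE)

# In-degree: how many distinct accounts point at each mentioned account
in_degree <- edges %>%
  count(mentions_screen_name, name = "popularity")

edges
in_degree
```

In this toy data `carol` is mentioned by two different accounts and `bob` by one, so `carol` ends up with the larger node in a plot sized by in-degree, even though the `alice` to `bob` edge is the heaviest one.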

Most Frequent Bigram Network

gg_bigram <- rt %>%
  select(text) %>%
  ## remove text noise: stand-alone "w" and "amp" tokens (e.g. from "&amp;");
  ## word boundaries avoid clipping words such as "new"
  mutate(text = stringr::str_remove_all(text, "\\b(w|amp)\\b")) %>%
  ## remove retweets
  filter(!stringr::str_detect(text, "^RT")) %>%
  ## remove urls
  mutate(text = stringr::str_remove_all(text, "https?[:]//[[:graph:]]+")) %>%
  mutate(id = 1:n()) %>%
  ## split text into words
  tidytext::unnest_tokens(word, text, token = "words") %>%
  ## remove stop words
  anti_join(tidytext::stop_words, by = "word") %>%
  ## paste words to text by id
  group_by(id) %>%
  summarise(text = paste(word, collapse = " ")) %>%
  ungroup() %>%
  ## again split text into bigrams (word co-occurrences or collocations)
  tidytext::unnest_tokens(bigram, text, token = "ngrams", n = 2) %>%
  separate(bigram, c("word1", "word2"), sep = " ") %>%
  ## count bigrams
  count(word1, word2, sort = TRUE) %>%
  ## keep the 100 most frequent bigrams
  slice(1:100) %>%
  drop_na() %>%
  ## create tidy graph object
  as_tbl_graph() %>%
  ## calculate node centrality
  mutate(Popularity = centrality_degree(mode = "in"))

gg_bigram %>%
  ggraph() +
  geom_edge_link(aes(edge_alpha = n, edge_width = n)) +
  geom_node_point(aes(size = Popularity)) +
  geom_node_text(aes(label = name), repel = TRUE) +
  theme_void() +
  scale_edge_alpha("", range = c(0.3, .6)) +
  ggtitle("Top Bigram Network from Tweets using hashtag #VOXPolConf18")
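The bigram step can be illustrated without any packages. The following is a base R sketch of the same idea (not tidytext's implementation), run on two made-up sentences with a tiny hypothetical stop word list. Because stop words are removed before the bigrams are formed, as in the pipeline above, words that were separated by a stop word in the original text become neighbours.

```r
# Made-up example texts and a tiny, hypothetical stop word list
texts      <- c("online extremism research", "new research on online extremism")
stop_words <- c("on", "new")

# Split one text into lower-case words, drop stop words, pair up neighbours
bigrams_of <- function(txt) {
  words <- strsplit(tolower(txt), "[^a-z0-9]+")[[1]]
  words <- words[nzchar(words) & !words %in% stop_words]
  if (length(words) < 2) return(character(0))
  paste(head(words, -1), tail(words, -1))
}

# Count bigrams over all texts, most frequent first
bigram_counts <- sort(table(unlist(lapply(texts, bigrams_of))), decreasing = TRUE)

bigram_counts
```

Here "research online" shows up as a bigram although the second sentence reads "research on online"; the same effect applies to the network above and is worth keeping in mind when reading it.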

sessionInfo()

## R version 3.5.0 (2018-04-23)

## Platform: x86_64-apple-darwin15.6.0 (64-bit)

## Running under: macOS High Sierra 10.13.6

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/3.5/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/3.5/Resources/lib/libRlapack.dylib

##

## locale:

## [1] de_DE.UTF-8/de_DE.UTF-8/de_DE.UTF-8/C/de_DE.UTF-8/de_DE.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] bindrcpp_0.2.2 tidygraph_1.1.0 igraph_1.2.2

## [4] ggraph_1.0.1.9999 rtweet_0.6.20 forcats_0.3.0

## [7] stringr_1.3.1 dplyr_0.7.6 purrr_0.2.5

## [10] readr_1.1.1 tidyr_0.8.1 tibble_1.4.2

## [13] ggplot2_3.0.0 tidyverse_1.2.1

##

## loaded via a namespace (and not attached):

## [1] ggrepel_0.8.0 Rcpp_0.12.18 lubridate_1.7.4

## [4] lattice_0.20-35 deldir_0.1-15 assertthat_0.2.0

## [7] digest_0.6.15 ggforce_0.1.1 R6_2.3.0

## [10] cellranger_1.1.0 plyr_1.8.4 backports_1.1.2

## [13] evaluate_0.12 httr_1.3.1 highr_0.6

## [16] blogdown_0.8.4 pillar_1.2.3 rlang_0.2.2

## [19] lazyeval_0.2.1 readxl_1.1.0 rstudioapi_0.8

## [22] Matrix_1.2-14 rmarkdown_1.10.14 labeling_0.3

## [25] tidytext_0.1.9 polyclip_1.9-1 munsell_0.5.0

## [28] broom_0.5.0 janeaustenr_0.1.5 compiler_3.5.0

## [31] modelr_0.1.2 xfun_0.3 pkgconfig_2.0.1

## [34] htmltools_0.3.6 openssl_1.0.1 tidyselect_0.2.4

## [37] gridExtra_2.3 bookdown_0.7 viridisLite_0.3.0

## [40] crayon_1.3.4 withr_2.1.2 SnowballC_0.5.1

## [43] MASS_7.3-49 grid_3.5.0 nlme_3.1-137

## [46] jsonlite_1.5 gtable_0.2.0 pacman_0.4.6

## [49] magrittr_1.5 concaveman_1.0.0 tokenizers_0.2.1

## [52] scales_1.0.0 cli_1.0.0 stringi_1.2.4

## [55] farver_1.0 viridis_0.5.1 xml2_1.2.0

## [58] htmldeps_0.1.1 tools_3.5.0 glue_1.3.0

## [61] tweenr_0.1.5.9999 hms_0.4.2 yaml_2.2.0

## [64] colorspace_1.3-2 rvest_0.3.2 knitr_1.20

## [67] bindr_0.1.1 haven_1.1.2